GM,

Last week, OpenAI unveiled its video generation model Sora, marking a shift in AI-generated content from text and images to video. Sora can produce high-quality videos from text descriptions in just a few seconds. It is easy to imagine professionals in many fields using Sora to explain abstract concepts, but the model also raises concerns about misuse.

Being able to tell reality from fiction in the digital world is crucial, yet the tools currently available are quite limited. People struggle to determine whether what they see was generated by AI, and relying on the naked eye or intuition alone is like chasing a train on foot.

The subject of this article, C2PA, bills itself as the "resume of digital content," helping people trace where content came from and judge whether information is authentic. It has already been adopted as a standard by OpenAI, Google, and Microsoft.

Indistinguishable

Many have heard stories like this: an elderly family member receives a call from an unknown number and hears someone crying on the other end who sounds just like their own child. In a panic, they rush to send money to help the child in distress, only to realize later that they have been deceived.

Creating a person's voice out of thin air is quite easy. For example, Respeecher specializes in transforming voices based on input audio, effectively making it sound like someone else is speaking your words, even in a different language. The video below transforms the voice of an English-speaking male into that of a French-speaking female. If Blocktrend were to use such a tool to introduce a female voice reading service, it could potentially lead people to believe that Blocktrend's author is female.

Voice is more easily trusted than text, and adding visuals can further enhance credibility. Whether it's audio or video, technology now allows for the realistic fabrication of content out of thin air. OpenAI refers to Sora as a simulator of the physical world, capable of creating lifelike scenes according to user instructions while adhering to the laws of physics. In the video below depicting the California Gold Rush in the 19th century, one might not easily discern that it was generated by Sora unless explicitly mentioned. After all, isn't this how documentaries typically look?

Faced with a flood of AI-generated content, people can no longer rely on their senses to tell reality from fiction, which overturns instincts shaped over thousands of years of human evolution. Although Respeecher prohibits users from simulating the voice of the U.S. president and OpenAI refuses to facilitate deceptive practices, top-down control ultimately has its limits. C2PA takes a different approach: it does not judge the authenticity of content but instead attaches a "production resume" to it.

Production Resume

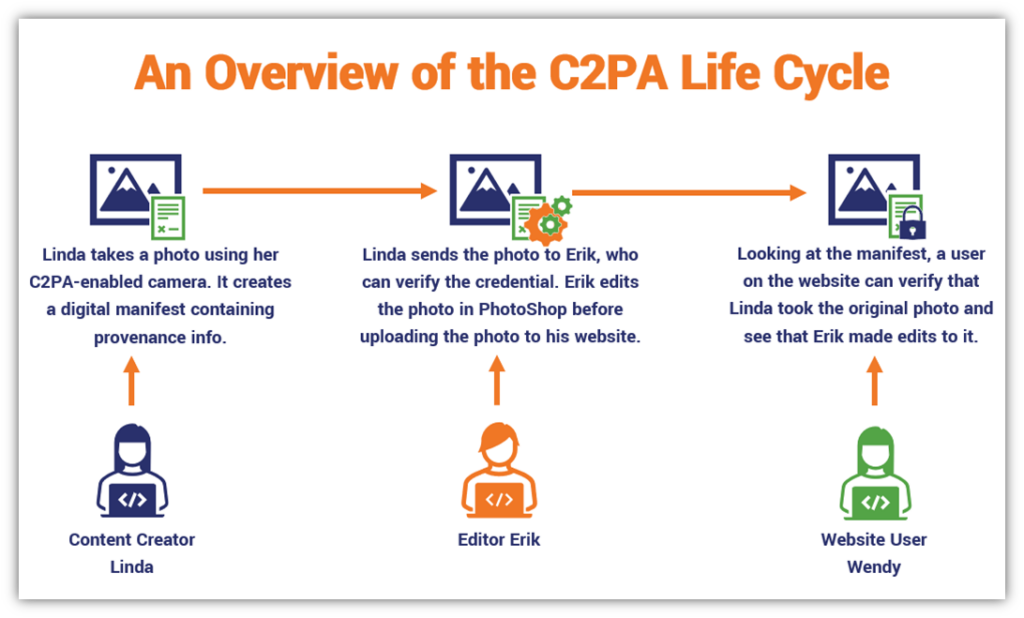

C2PA stands for the Coalition for Content Provenance and Authenticity, led by Adobe, with thousands of companies currently participating. The alliance's goal is simple: to encourage people to check the "production resume" of digital content before consuming it. According to OpenAI:

C2PA is an open technical standard that allows companies, media, or others to embed traceable metadata in audiovisual content. C2PA is not only applicable to AI-generated images; camera manufacturers and news organizations can also adopt the same standard to provide a history for audiovisual content ... People can use the Content Credentials verify website to check if an image was generated using OpenAI's tools ... unless the metadata has been deleted.

The quickest way to understand it is to try it. Below is an image I casually generated using DALL·E. Simply drop this image into the Content Credentials website for verification, and you can see who created the image, when it was produced, and how it has been modified.

This information looks familiar, doesn't it? The details panel for photos on a mobile phone records much the same thing. It shows when and where the photo was taken and which device was used, and even details like aperture size and applied filters are meticulously documented. This is the photo's metadata. If the time or location is incorrect, it can be adjusted manually.

C2PA is likewise metadata for audiovisual content, but the recorded information cannot be freely edited by users. If social media platforms and search engines support displaying C2PA information in the future, then viewing digital content online will be like shopping in a supermarket. If the product packaging is intact and comes with a production resume, consumers will feel that the product can withstand scrutiny. Conversely, if the product is sold in bulk, without packaging or labeling, consumers will be suspicious.

However, C2PA is still in the advocacy stage. Numerous companies have responded, but much of that response looks like symbolic support, and few have actually integrated C2PA into their products. Whether C2PA becomes widely adopted depends on the attitudes of media outlets, social platforms, and instant messaging apps.

Recently, companies like Sony, Nikon, and Leica have announced the launch of cameras integrated with C2PA. This move aims to provide photographers' works with a traceable resume, adding value to their creations. However, even if photographers are willing to invest in a new camera that supports C2PA, the data saved by the camera becomes useless if the photos are compressed upon upload to social platforms.

Social platforms and instant messaging software are not fond of large files. Fortunately, data released by OpenAI indicates that after adding C2PA metadata, the increase in file size is minimal, and users hardly notice the difference. However, C2PA is not a panacea. Even if all companies are willing to support C2PA to ensure the completeness of file resumes, there are still many practical issues to be addressed.

Practical Issues

In a lecture, renowned cryptographer and Stanford University professor Dan Boneh outlined several ways to defeat C2PA, and they are eye-opening.

Boneh pointed out that the new cameras supporting C2PA use a public-private key mechanism to sign photos, so that third parties cannot tamper with the metadata undetected. However, any private key carries the risk of leakage. If a camera's private key is compromised, anyone who holds it can sign their own photos, causing chaos for C2PA.
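To make the signing mechanism and the leaked-key risk concrete, here is a minimal sketch. It is not how any real camera or the C2PA specification implements signing: it uses textbook RSA with toy parameters and no padding so that it runs with only the Python standard library, and the device name, timestamps, and function names are all hypothetical.

```python
import hashlib
import json

# Toy RSA parameters built from two known Mersenne primes. Far too small and
# too simplistic (no padding) for real use; this is an illustration only.
P = 2**127 - 1
Q = 2**89 - 1
N = P * Q                           # public modulus
E = 65537                           # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent, meant to stay in the camera


def digest(photo: bytes, metadata: dict) -> int:
    """Hash pixels and metadata together, reduced below the RSA modulus."""
    payload = photo + json.dumps(metadata, sort_keys=True).encode()
    return int.from_bytes(hashlib.sha256(payload).digest(), "big") % N


def sign(photo: bytes, metadata: dict, private_exponent: int) -> int:
    """Sign with the private key (hypothetical camera-side step)."""
    return pow(digest(photo, metadata), private_exponent, N)


def verify(photo: bytes, metadata: dict, signature: int) -> bool:
    """Anyone can check the signature using only the public key (N, E)."""
    return pow(signature, E, N) == digest(photo, metadata)


photo = b"raw sensor data"
metadata = {"device": "hypothetical-camera", "taken_at": "2024-02-20T10:00:00Z"}
signature = sign(photo, metadata, D)
print(verify(photo, metadata, signature))   # True: untouched photo and metadata

# A third party edits the metadata without the private key.
edited = {**metadata, "taken_at": "2019-01-01T00:00:00Z"}
print(verify(photo, edited, signature))     # False: the tampering is detected

# If the private key leaks, a forger can sign fabricated content.
fake_photo = b"fabricated pixels"
forged = sign(fake_photo, metadata, D)
print(verify(fake_photo, metadata, forged))  # True: indistinguishable from genuine
```

The last check is the uncomfortable part: verification only proves that whoever signed held the key, not that the photo came from an honest camera.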

Even if the private key is not leaked, there are still risks. For example, a forger can prepare an ultra-high-resolution screen, open someone else's photo, and then use a camera supporting C2PA to take a picture of the screen. If done well, the re-photographed image looks the same as the original photo, and both images carry C2PA certification. This creates yet another problem of telling real from fake. Furthermore, if a photo editor uses malicious software to alter C2PA's history records, the entire digital trust chain is undermined.

In response, Dan Boneh proposed a mechanism based on zero-knowledge proofs (zk-SNARKs) to ensure that photos cannot be maliciously tampered with. Taiwan's Web3 startup, Numbers, advocates recording C2PA's history data on the blockchain rather than in the file itself. As long as each modification leaves a record on the chain, the data cannot be silently altered midway.
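Why would an on-chain record resist mid-way tampering? One way to see it is with a hash chain, sketched below. This is only an assumption about the general idea, not Numbers' actual design or any specific blockchain: every record stores the hash of the record before it, and the latest hash is published somewhere others can see it. All names here are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(record: dict) -> str:
    """Hash a history record in a stable (sorted-key) JSON form."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


def head(chain: list) -> str:
    """Hash of the latest record; this is the value that gets published."""
    return record_hash(chain[-1]) if chain else GENESIS


def append(chain: list, action: str, file_bytes: bytes) -> None:
    """Add one modification record that points back at the previous record."""
    chain.append({
        "action": action,
        "file_hash": hashlib.sha256(file_bytes).hexdigest(),
        "prev_hash": head(chain),
    })


def is_intact(chain: list, published_head: str) -> bool:
    """Recompute every link and compare the result with the published head."""
    expected = GENESIS
    for record in chain:
        if record["prev_hash"] != expected:
            return False
        expected = record_hash(record)
    return expected == published_head


history = []
append(history, "captured", b"original pixels")
append(history, "cropped", b"cropped pixels")
append(history, "color-corrected", b"final pixels")
published = head(history)
print(is_intact(history, published))   # True

# Someone quietly rewrites the middle of the history.
history[1]["action"] = "untouched"
print(is_intact(history, published))   # False: the next link no longer matches
```

Changing any earlier record changes its hash, which breaks the link stored in the following record, so the edit cannot be hidden without rewriting everything after it and the published head as well.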

Since 2020, Blocktrend has been discussing how to use the blockchain to ensure the authenticity of content. At that time, examples were limited, and many people saw little problem with media using old photos for news. Today, as the threshold for creating content out of thin air has dropped, people are gradually realizing the value of authenticity. Online discussion of C2PA is still limited, but as more and more companies join, it is expected to become the industry standard for the production resume of audiovisual content.

Blocktrend is an independent media platform sustained by reader subscription fees. If you find Blocktrend's articles valuable, we welcome you to share this piece. You can also join discussions on our member-created Discord or collect the Writing NFT to include this article in your Web3 records.